One Policy, Two Worlds, Many Robots

Abstract

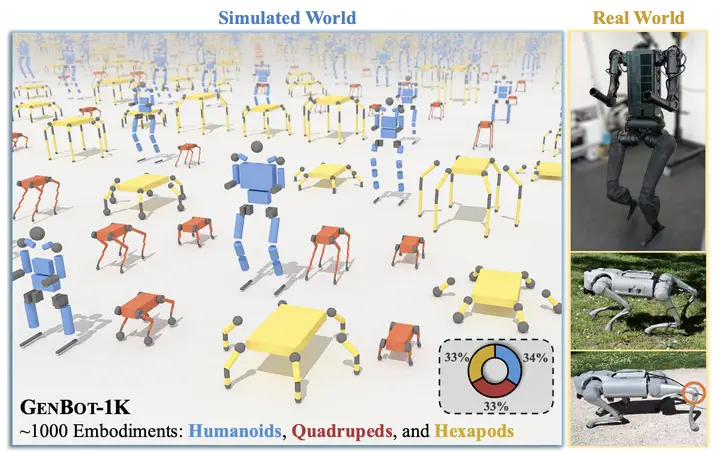

Developing generalist agents that operate across diverse tasks, environments, and robot embodiments is a grand challenge in robotics and artificial intelligence. While substantial progress has been made in cross-task and cross-environment generalization, achieving broad generalization to novel embodiments remains elusive. In this work, we study embodiment scaling laws — the hypothesis that increasing the quantity of training embodiments improves generalization to unseen ones. To explore this, we procedurally generate a dataset of 1,000 varied robot embodiments, spanning humanoids, quadrupeds, and hexapods, and train embodiment-specific reinforcement learning experts for legged locomotion. We then distill these experts into a single generalist policy capable of handling diverse observation and action spaces. Our large-scale study reveals that generalization performance improves with the number of training embodiments. Notably, a policy trained on the full dataset zero-shot transfers to diverse unseen embodiments in both simulation and real-world evaluations. These results provide preliminary empirical evidence for embodiment scaling laws and suggest that scaling up embodiment quantity may serve as a foundation for building generalist robot agents.